Anthropic released Claude Sonnet 4.6 on 17 February 2026, and the benchmarks tell a story that should interest every business running AI tools. The mid-tier model now matches or beats the flagship Opus 4.6 on several real-world tasks at three-fifths of the price. At $3 per million input tokens and $15 per million output tokens, it sits at the same price point as the previous Sonnet 4.5, but the performance gap between "mid-tier" and "flagship" has practically disappeared.

For UK businesses already using Claude for coding, content, or customer workflows, this changes the maths on which model to run. For those still deciding between AI platforms, it makes the decision harder in the best possible way.

What Actually Changed in Claude Sonnet 4.6

Sonnet 4.6 isn't a minor update. Anthropic describes it as "a full upgrade of the model's skills across coding, computer use, long-context reasoning, agent planning, knowledge work, and design." That's a broad claim, but the numbers back it up.

The headline stat: Claude Code users preferred Sonnet 4.6 over its predecessor roughly 70% of the time. More surprising, users preferred it over Opus 4.5 (Anthropic's previous top-tier model from November 2025) 59% of the time. A mid-priced model that most users prefer to last quarter's flagship. That doesn't happen often.

Anthropic's own safety researchers described the model as having "a broadly warm, honest, prosocial, and at times funny character, very strong safety behaviours." Prompt injection resistance also improved over Sonnet 4.5, which matters if you're building customer-facing tools.

The Benchmarks That Matter for Business

Raw benchmark numbers can feel abstract, so here's what they mean in practical terms.

Coding (SWE-bench Verified: 79.6%): This tests whether the model can solve real software engineering problems. Sonnet 4.6 scores 79.6%, just behind Opus 4.6 at 80.8% and ahead of GPT-5.2 at 77.0%. For businesses using AI to build or maintain websites, that 2.6-percentage-point lead over GPT-5.2 can translate into fewer bugs and faster development cycles.

Computer Use (OSWorld-Verified: 72.5%): This measures how well the model can operate a computer independently, navigating interfaces, filling forms, and handling multi-step workflows. Sonnet 4.6 scores 72.5%, nearly identical to Opus 4.6's 72.7%. GPT-5.2 trails at 38.2%. That's not a typo: on this benchmark, Claude's computer use score is roughly double OpenAI's.

Office Productivity (GDPval-AA Elo: 1633): Here's where it gets interesting. Sonnet 4.6 actually beats the flagship Opus 4.6 (1559 Elo) on real-world office tasks. It also beats GPT-5.2 (1524 Elo). If your team uses AI for spreadsheets, reports, or data analysis, the mid-tier model is the top scorer on this benchmark.

Financial Analysis (Finance Agent: 63.3%): Another category where Sonnet 4.6 outperforms Opus 4.6 (62.0%) and GPT-5.2 (60.7%). For businesses that need AI to work with financial data, this is the model to use.

Anthropic also reported 94% accuracy on Pace's insurance benchmark, with the model demonstrating "human-level capability in tasks like navigating a complex spreadsheet or filling out a multi-step web form."
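The percentage-point gaps and the "roughly double" computer use claim are easy to check. This short Python sketch recomputes them from the scores quoted above; the figures are Anthropic's published numbers as reported in this article, and the `gap` and `ratio` helpers are illustrative names of our own.

```python
# Benchmark scores as quoted in this article.
SCORES = {
    "SWE-bench Verified (%)": {"Sonnet 4.6": 79.6, "Opus 4.6": 80.8, "GPT-5.2": 77.0},
    "OSWorld-Verified (%)": {"Sonnet 4.6": 72.5, "Opus 4.6": 72.7, "GPT-5.2": 38.2},
    "GDPval-AA (Elo)": {"Sonnet 4.6": 1633, "Opus 4.6": 1559, "GPT-5.2": 1524},
    "Finance Agent (%)": {"Sonnet 4.6": 63.3, "Opus 4.6": 62.0, "GPT-5.2": 60.7},
}


def gap(benchmark: str, a: str, b: str) -> float:
    """Score of model a minus model b, rounded to one decimal place.

    For percentage benchmarks this is a gap in percentage points;
    for GDPval-AA it is a gap in Elo points.
    """
    row = SCORES[benchmark]
    return round(float(row[a] - row[b]), 1)


def ratio(benchmark: str, a: str, b: str) -> float:
    """How many times higher model a scores than model b."""
    row = SCORES[benchmark]
    return round(row[a] / row[b], 2)


if __name__ == "__main__":
    for name in SCORES:
        print(
            f"{name}: Sonnet vs Opus {gap(name, 'Sonnet 4.6', 'Opus 4.6'):+}, "
            f"Sonnet vs GPT-5.2 {gap(name, 'Sonnet 4.6', 'GPT-5.2'):+}"
        )
    print("OSWorld, Sonnet 4.6 / GPT-5.2:", ratio("OSWorld-Verified (%)", "Sonnet 4.6", "GPT-5.2"))
```

The OSWorld ratio comes out at about 1.9, which is where "roughly double" comes from.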

What Developers Will Notice

The coding improvements go beyond benchmark scores. According to Anthropic, Sonnet 4.6 reads the surrounding context more effectively before modifying code and consolidates shared logic rather than duplicating it. In practice, that means fewer broken edits during long coding sessions, less duplicated code, and better understanding of existing patterns before suggesting changes.

The model also supports a 1 million token context window (in beta), which means it can process entire codebases, lengthy contracts, or dozens of research papers in a single conversation. For WordPress hosting clients maintaining complex sites, that's usually enough context to load an entire theme and its plugins at once, so the model can reason about how they interact.
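Before uploading a theme or plugin folder, you can get a rough idea of whether it fits. The sketch below walks a directory and applies a four-characters-per-token rule of thumb; that ratio, the chosen file extensions and the helper names are our own assumptions rather than Claude's real tokenizer, so treat the result as a ballpark and use Anthropic's token-counting endpoint when you need exact figures.

```python
from pathlib import Path

# Rough heuristic (assumption): about 4 characters of code or English per token.
CHARS_PER_TOKEN = 4
# Sonnet 4.6's 1M-token window (beta), as described above.
CONTEXT_LIMIT = 1_000_000
# Text file types typical of a WordPress theme or plugin (illustrative choice).
SOURCE_SUFFIXES = {".php", ".js", ".css", ".html", ".json", ".txt", ".md"}


def estimate_tokens(root: str) -> int:
    """Estimate the token count of all matching text files under root."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in SOURCE_SUFFIXES:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root: str, limit: int = CONTEXT_LIMIT) -> bool:
    """True if the estimated token count is within the given limit."""
    return estimate_tokens(root) <= limit


if __name__ == "__main__":
    import sys

    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    tokens = estimate_tokens(folder)
    print(f"~{tokens:,} tokens; fits in a 1M window: {tokens <= CONTEXT_LIMIT}")
```

In practice, leave headroom below the limit for your instructions and the model's reply.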

Extended thinking and adaptive thinking modes are both supported, letting developers choose between faster responses and deeper reasoning depending on the task.
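For developers, the choice between a fast answer and deeper reasoning comes down to one request parameter. The sketch below builds Messages API arguments for Sonnet 4.6 and, when asked, enables extended thinking through the `thinking` parameter with a `budget_tokens` limit. The `build_request` helper and the 10,000-token budget are our own illustrative choices, and adaptive thinking is configured differently, so check Anthropic's documentation before relying on this.

```python
def build_request(prompt: str, deep_reasoning: bool, max_tokens: int = 16_000) -> dict:
    """Build keyword arguments for a Messages API call (hypothetical helper).

    Assumption: extended thinking is switched on with a token budget via the
    `thinking` parameter; adaptive thinking is not covered here.
    """
    request = {
        "model": "claude-sonnet-4-6",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if deep_reasoning:
        # The thinking budget must be at least 1,024 tokens and below max_tokens.
        budget = min(10_000, max_tokens - 1_000)
        if budget < 1_024:
            raise ValueError("max_tokens is too small for extended thinking")
        request["thinking"] = {"type": "enabled", "budget_tokens": budget}
    return request


if __name__ == "__main__":
    import anthropic  # official SDK (pip install anthropic); reads ANTHROPIC_API_KEY

    client = anthropic.Anthropic()
    response = client.messages.create(
        **build_request("Review this three-tier pricing model for risks.", deep_reasoning=True)
    )
    # With thinking enabled, thinking blocks come before the final text block.
    for block in response.content:
        if block.type == "text":
            print(block.text)
```

Leaving `deep_reasoning` off gives the faster default behaviour; turning it on trades latency and output tokens for more deliberate answers.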

"Claude Sonnet 4.6 is our most capable Sonnet model yet, delivering frontier performance across coding, agents, and professional work at scale."

Anthropic, Official Announcement, 17 February 2026

I've been using Claude Code as my primary development tool for months now, and the shift from Sonnet 4.5 to 4.6 was noticeable within the first hour. The model "forgot" what it was working on mid-task less often. Less code duplication. Better awareness of the broader project structure before making changes. The 70% preference rate among Claude Code users doesn't surprise me at all.

AI That Can Use Your Computer

Computer use is where Claude Sonnet 4.6 pulls furthest ahead of the competition. The ability for an AI to navigate software interfaces, fill in forms, move between applications, and complete multi-step workflows opens up automation possibilities that weren't practical six months ago.

At 72.5% on OSWorld-Verified, Sonnet 4.6 scores nearly twice as high as GPT-5.2 (38.2%) on computer use. For UK businesses, that translates into practical automation: data entry, form filling, report generation, CRM updates, and repetitive admin tasks that currently eat hours each week.

The Vending-Bench Arena results are also worth noting. This benchmark tests long-horizon planning: the AI runs a simulated vending business and has to make strategic decisions over time. Sonnet 4.6 earned roughly $5,700 in simulated profit, up from $2,100 for Sonnet 4.5, while Opus 4.6 earned $7,400. In other words, the mid-tier model now achieves about 77% of the flagship's result on long-horizon strategy.
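The 77% figure is simply the ratio of the two simulated profits. A few lines of Python reproduce it, along with the jump from Sonnet 4.5; the variable names are ours, and the dollar figures are the ones quoted above.

```python
# Vending-Bench Arena simulated profits quoted above (US dollars).
sonnet_45 = 2_100
sonnet_46 = 5_700
opus_46 = 7_400

share_of_flagship = sonnet_46 / opus_46  # fraction of Opus 4.6's profit
generation_gain = sonnet_46 / sonnet_45  # multiple of Sonnet 4.5's profit

print(f"Sonnet 4.6 earns {share_of_flagship:.0%} of Opus 4.6's profit")
print(f"and {generation_gain:.1f}x what Sonnet 4.5 earned")
```

Note that this compares simulated profit, which is a proxy for planning quality rather than a direct measure of it.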

"The performance-to-cost ratio of Claude Sonnet 4.6 is extraordinary. It's hard to overstate how fast Claude models have been evolving in recent months."

Simon Willison, Independent Developer and Author, 17 February 2026

Simon Willison's assessment echoes what I've been telling clients: the gap between "best available" and "best value" in AI has collapsed. You don't need the most expensive model to get top-tier results anymore. That's a meaningful shift for small businesses watching their AI spend.

The Pricing That Changes Everything

Claude Sonnet 4.6 costs $3 per million input tokens and $15 per million output tokens. That's identical to Sonnet 4.5. But the performance is now within touching distance of Opus 4.6 ($5/$25 per million tokens) and ahead of GPT-5.2 ($5/$15 per million tokens) on several benchmarks.

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Context Window |
|---|---|---|---|
| Claude Sonnet 4.6 | $3 | $15 | 1M tokens (beta) |
| Claude Opus 4.6 | $5 | $25 | 200K tokens |
| GPT-5.2 | $5 | $15 | 2M tokens |
| Gemini 3 Pro | $1.25 | $10 | 2M tokens |

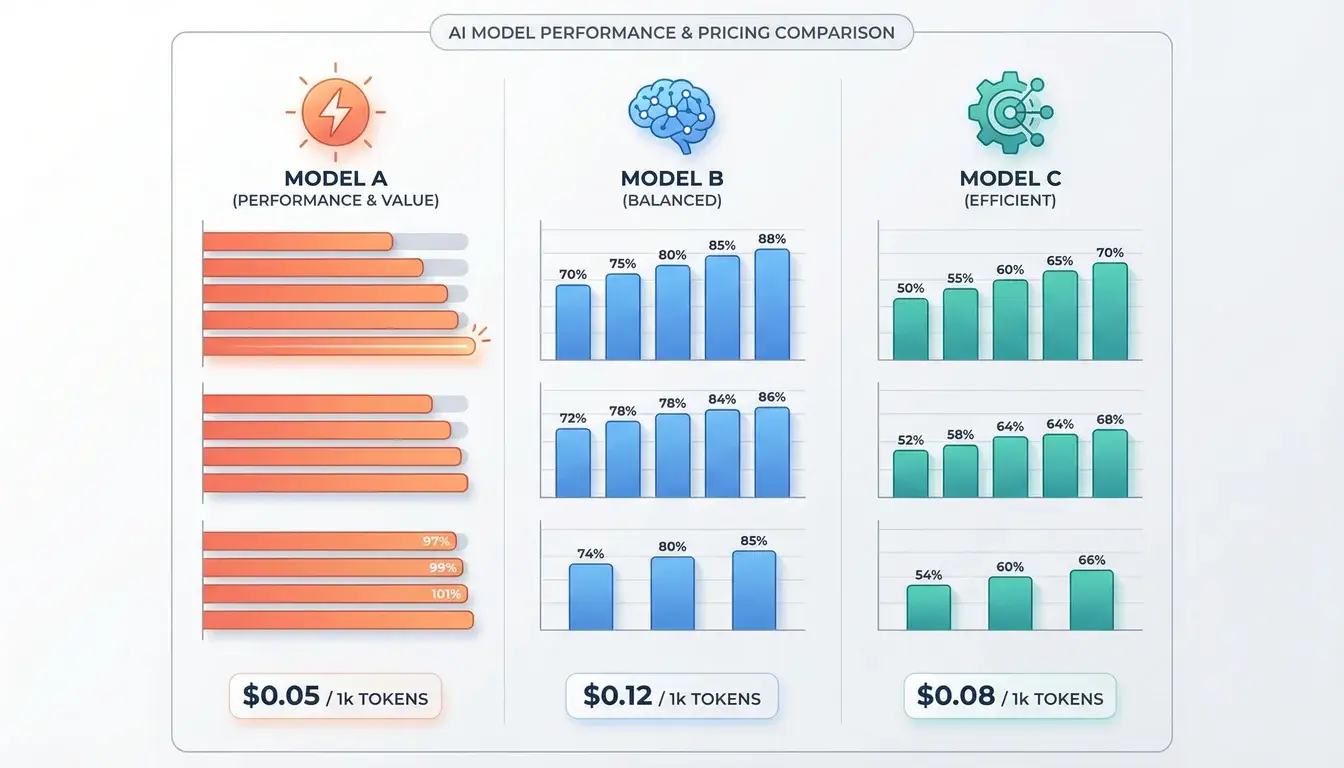

Gemini 3 Pro is cheaper, yes. But it doesn't match Sonnet 4.6 on coding or computer use benchmarks. GPT-5.2 costs 67% more on input tokens for roughly equal or lower performance in most categories. For a detailed breakdown of how all three stack up, we put together a full comparison of Claude Sonnet 4.6 vs GPT-5.2 vs Gemini 3 with benchmark tables and use-case recommendations.
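To see what the per-token prices mean for an actual bill, the sketch below prices a hypothetical monthly workload of 50 million input and 10 million output tokens at the list prices in the table. The workload is an assumption, and the helper ignores prompt caching, batch discounts and any long-context surcharges, all of which can change real costs.

```python
# List prices per million tokens (USD), from the pricing table: (input, output).
PRICES = {
    "Claude Sonnet 4.6": (3.00, 15.00),
    "Claude Opus 4.6": (5.00, 25.00),
    "GPT-5.2": (5.00, 15.00),
    "Gemini 3 Pro": (1.25, 10.00),
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost for a month's usage at list prices (no caching or batch discounts)."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 50M input and 10M output tokens per month.
    for model in PRICES:
        print(f"{model}: ${monthly_cost(model, 50_000_000, 10_000_000):,.2f}")
    premium = PRICES["GPT-5.2"][0] / PRICES["Claude Sonnet 4.6"][0] - 1
    print(f"GPT-5.2 input premium over Sonnet 4.6: {premium:.0%}")
```

On this workload Sonnet 4.6 comes to $300 a month against $500 for Opus 4.6 (60% of the flagship's cost), $400 for GPT-5.2 and $162.50 for Gemini 3 Pro. Your own input-to-output mix will shift these numbers, so it's worth plugging in figures from your usage dashboard.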

What This Means for UK Businesses

Three things stand out from this release.

First, the "good enough" tier of AI just got much better. Businesses that were using Sonnet 4.5 (or avoiding Opus pricing) can now access near-flagship intelligence without changing their budget. If you're running AI-assisted customer service, content generation, or development workflows, Sonnet 4.6 is an immediate upgrade at no extra cost.

Second, computer use is becoming practical. The 72.5% OSWorld score means AI agents can now handle real business software with reasonable reliability. Not perfectly, but well enough for supervised automation of repetitive tasks. If your team spends hours on data entry, CRM updates, or report compilation, it's worth testing what Claude can automate.

Third, the pricing pressure benefits everyone. When the mid-tier model matches the flagship, it forces the entire industry to deliver more for less. OpenAI and Google are likely to respond. That competition drives down costs and drives up capability for every business using AI tools.

For businesses already investing in AI visibility, this is another signal that AI tools are becoming essential infrastructure, not optional extras. The businesses adopting these tools now will have a meaningful head start over those that wait.

If you're not sure where your business stands with AI, our AI Visibility Checker can show you how AI platforms currently see your website. It's free and takes 30 seconds.

How to Access Claude Sonnet 4.6

Sonnet 4.6 is available now as the default model for all Claude Free and Pro users on claude.ai and Claude Cowork. It's also accessible through the API (model ID: claude-sonnet-4-6), Amazon Bedrock, and Google Cloud Vertex AI.

Anthropic also expanded the free tier to include file creation, connectors, skills, and compaction, meaning free users get more functionality alongside the upgraded model.

For businesses already using the GPT-5.2 API or considering a switch, Sonnet 4.6 offers a relatively straightforward migration path, with better coding scores and a lower input-token price.
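Most of a basic migration is reshaping the request. One concrete difference: Anthropic's Messages API takes the system prompt as a top-level `system` parameter rather than as a message with the role "system". The sketch below converts OpenAI-style chat history into Messages API arguments for the `claude-sonnet-4-6` model ID. The `to_anthropic` helper is hypothetical and handles plain text turns only; tool calls, images and other roles would need their own handling.

```python
def to_anthropic(openai_messages: list[dict], max_tokens: int = 1024) -> dict:
    """Convert OpenAI-style chat messages into Anthropic Messages API kwargs.

    System messages are joined into the top-level `system` parameter;
    user and assistant turns carry over unchanged. Other roles are dropped.
    """
    system_parts = [m["content"] for m in openai_messages if m["role"] == "system"]
    turns = [
        {"role": m["role"], "content": m["content"]}
        for m in openai_messages
        if m["role"] in ("user", "assistant")
    ]
    request = {"model": "claude-sonnet-4-6", "max_tokens": max_tokens, "messages": turns}
    if system_parts:
        request["system"] = "\n\n".join(system_parts)
    return request


if __name__ == "__main__":
    import anthropic  # official SDK (pip install anthropic); reads ANTHROPIC_API_KEY

    history = [
        {"role": "system", "content": "You are a helpful assistant for a UK web agency."},
        {"role": "user", "content": "Summarise our hosting options in three bullet points."},
    ]
    client = anthropic.Anthropic()
    reply = client.messages.create(**to_anthropic(history))
    print(reply.content[0].text)
```

Keeping the conversion in one function means the rest of your application can keep its existing message format while you compare both providers.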


Learn more about our WordPress Hosting and WordPress Turbo Hosting services.
